9/28/2014

The annual SIGGRAPH conference, while best known for being a computer graphics conference, is in fact a conference and exhibition on “computer graphics and interactive techniques.” A SIGGRAPH organizer reached out to Harmonix to see if there was anything we’d like to present in the latter area for SIGGRAPH 2014, and I proposed a couple of ideas that were picked up. One of them (in addition to a segment of the “Real-Time Live!” event[1]) was a course entitled Kinect Technology in Games.

Speaking duties for the course were split between me and Andy Bastable, a Lead Programmer at Rare/Microsoft Studios. Andy and I opened with a basic overview of the Kinect camera and its technology. I followed with an overview of how we used the skeleton tracking capabilities of the Kinect in Disney Fantasia: Music Evolved. Andy finished with an explanation of how Rare used the image feed features of the Kinect to create the Champion feature in Kinect Sports Rivals.[2]

Below you’ll find an edited version of my slides and notes from my overview of our skeleton tracking in Fantasia. I’ve supplemented the notes with links and footnotes where appropriate. A few of the slides had video in them when originally presented; this has been noted below with a link to the video if available elsewhere online. It all seems a bit sterile in this format, unfortunately, but hopefully it retains some value!

[This slide contained a preview trailer for one of the worlds in our game. That trailer is viewable here.]

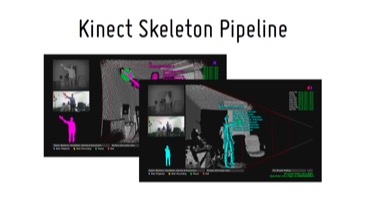

There’s a lot going on here that can be hard to discern the first time you see it. Basically, we have two main modes of play, which internally during development we called “Discovery” and “Performance.” In Discovery gameplay, you explore a musical space with a cursor that moves in three dimensions. In Performance gameplay, you match authored cues along with popular music in a rough imitation of a conductor. Both of these modes of gameplay were driven by an analysis of the Kinect’s skeleton pipeline data, rather than the image feed data Andy discussed in the introduction. I’m going to run you through the basics of that skeleton pipeline, and then we’ll get into some Fantasia specifics afterwards.

In my mind, the skeleton pipeline is what really makes the Kinect special, and more than just a camera. This is a toolkit built and provided by Microsoft, which can take the image feeds that Andy mentioned, process them with minimal latency, and give you back information about how players are standing in 3‑D space. Here are a couple screenshots of a debug tool, showing how the skeleton is represented in a space.

The way the underlying processing works is beyond the scope of this talk, but there are a few research papers that start to dig into the methodology, and they will really help you understand some of the Kinect’s quirks. I recommend them to anyone who plans to do any extensive Kinect development. They’re research papers, so they’re dense, but there are some important nuggets of information.

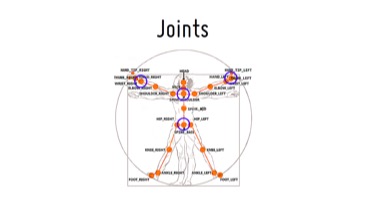

So let’s look at some data. What’s the sort of thing you’d get back from some skeleton API requests? The Kinect can track the locations of up to six people, telling you roughly where in the room people are standing. It can track the skeletons of two people, making it possible to have full-skeleton multiplayer gameplay. Thirty times per second, you can get information about where all the joints of those players are in 3‑D space. You also get confidence levels for these joint positions, and a couple other metrics like whether the hands are open or closed. There’s also a sitting skeleton pipeline, with fewer joints (and less processing cost).

These aren’t your literal skeletal joints; these are a handful of joints that make up a reduced skeleton representation. Here are the joints that the newer version of the Kinect camera (i.e., the Xbox One Kinect and Kinect For Windows 2) will provide.

There’s a ton of data here. And for a tiny spoiler, here are the joints we use for ~95% of Fantasia’s gameplay. [Here the blue circles appeared, indicating the joints to which I was referring.] I’ll get back to why that is.

Now, this all sounds fine and good, and I think you’re already dreaming up all sorts of ways you could use this data. But when you download the SDK, get everything set up, fire up the camera, and step in front of it—and here’s what I think trips up a lot of people—here’s what you see.

[This slide contained a video of the debug feeds of me walking around in front of a Kinect in my office.[3] My skeleton flickers around, especially as I get near the edges of the frame, or as I put my hands up in front of me. It looks borderline useless.]

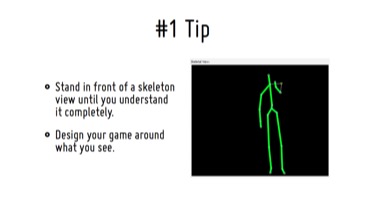

This is the most common complaint I think you’ll hear from people who have tried to mess around with the Kinect: that it’s just not “reliable.” And I think this is incorrect. The Kinect is very reliable, in that it reliably behaves this way. I’m not trying to be glib in saying that; this is an important thing to realize. If you know it’s going to behave this way, you can develop for that.

So, following from that, this is my number one tip for developing with the Kinect skeleton. Open up the demo app that just shows image and skeleton feeds, and stare at it. Walk around. Turn around. Have someone else come over and walk around. Hold your hands up. Wave your arms around. Point at the camera. Crouch down. Do all of this while very closely watching the skeleton, and you’ll start to see some interesting things.

You’ll see arm breakdowns as you point your arm right at the camera. You’ll see the head pop around if you obscure it (from the camera’s point of view) with your hand. You’ll see knees flop around and feet teleport up to knees if they’re obscured. You’ll also see that the hip and shoulder centers stay pretty stable, as though they’re averaged into the center of mass. You’ll see that a twist of the wrist will give some hand movement, even if it’s not 100% precise. You’ll see that leaning forwards and back is rough, but turning your shoulders is detected pretty well. You’ll see a lot of cool stuff like this, and you’ll start to see good ways to detect things and bad ways to detect things.

This is the important part. Design your game around what you see here, and you can make something that feels great, and really shows off the hardware. Design something in a vacuum and then try to use the Kinect to drive it, and you’ll get into trouble.

Here are some more general tips with regard to Kinect skeleton development.

1.) Make everything relative to a stable unit of body measurement. If you start doing detection based on absolute units (like meters), you’ll run into problems as soon as people who are a different size than you start playing your game. Start by getting a relative sense of how large the skeleton is, keep a running average of that (ultra-smoothed), and use that as your metric for measurements. I’ve found that the hip center and shoulder center work great, stability-wise, so I suggest looking at the relationship between those to determine player size.

2.) Anticipate when your gameplay will be getting you into trouble with hand, head, and other skeleton positions, and look for it in your algorithms. Look for knees that are bent back double. Look for elbows that are moving way too fast, especially when they’re “behind” the hands. Look for hands that quickly cross and uncross. When you see that stuff, you can predict that the player is actually below the camera view, or that the elbow should just be between the hand and the shoulder, or that the hands should just be pinned together like they’re clasped. If you know the failure cases, you can avoid them.

3.) Every interaction should be custom-coded. During early Fantasia development I built a quick toolkit for our designers to use. They could set up little constraints, like “when the hands are more than one body unit apart”, or “when one hand goes above its shoulder and the other hand is below the waist”. They could use those constraints to trigger gameplay results, and we scaffolded a bunch of gameplay up from this. This worked decently to try early things out, but by the time the game shipped, almost every single interaction and every single Performance gesture type was hand-coded by an engineer from the raw joint data. Every one needed its own smoothing algorithm and coefficients to handle the type of movement that was happening and the responsiveness it required. Other interactions wanted the joints in different coordinate spaces, or needed to handle different breakdown scenarios (like holding hands together) in smart ways. It was when we really got down to the metal that we could make them feel polished.

4.) Always, always, always give the player feedback. The player needs to be supremely confident in the system; as soon as they think the camera isn’t “getting” them, they’re a lost cause. Let them know when they’re off to the side, and how they should step back towards the center. Let them know the camera lost them and something needs to change. If their hand is controlling something, have it control it (or something else in the scene) in an analog fashion, so they see small changes happening as they move. This is incredibly important, and I’ll show a lot of this in the context of Fantasia later.
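To make tips 1 through 3 a little more concrete, here is a minimal Python sketch. It is not the shipped Fantasia code: the names `BodyUnit`, `hands_apart`, and `plausible_elbow`, the smoothing factor, and the speed limit are all illustrative assumptions, and joint positions are assumed to arrive as `(x, y, z)` tuples in meters.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) joint position in meters


@dataclass
class BodyUnit:
    """Running, heavily smoothed hip-center-to-shoulder-center distance."""
    alpha: float = 0.02            # assumed smoothing factor; smaller = smoother
    value: Optional[float] = None  # current estimate in meters

    def update(self, hip_center: Vec3, shoulder_center: Vec3) -> float:
        sample = math.dist(hip_center, shoulder_center)
        if self.value is None:
            self.value = sample    # seed with the first observation
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value


def hands_apart(left: Vec3, right: Vec3, unit: float,
                min_units: float = 1.0) -> bool:
    """Designer-style constraint: hands more than `min_units` body units apart."""
    return math.dist(left, right) > min_units * unit


def plausible_elbow(elbow: Vec3, prev_elbow: Vec3, hand: Vec3, shoulder: Vec3,
                    unit: float, dt: float,
                    max_units_per_sec: float = 8.0) -> Vec3:
    """If the elbow moved implausibly fast, pin it between hand and shoulder."""
    speed = math.dist(elbow, prev_elbow) / dt / unit  # body units per second
    if speed > max_units_per_sec:                     # assumed threshold
        return tuple((h + s) / 2 for h, s in zip(hand, shoulder))
    return elbow
```

Because every threshold is expressed in body units rather than meters, the same constraint should behave similarly for a child and a tall adult. The actual values would need tuning per interaction.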

Now it’s time to go into some case studies from Fantasia: Music Evolved. I’ll talk a bit about the major pieces of the Discovery gameplay mode, and I’ll talk a bit about the gesture system that makes up the Performance gameplay mode. To start that off, here’s a clip of some basic Discovery gameplay.

[This slide contained a video of some Discovery gameplay, viewable here starting at the 45-second mark.]

You’ve got a diorama-like space, in which you can step left and right to explore. Your hand controls the “Muse,” a tool given to you by the sorcerer Yen Sid, which for gameplay purposes is just a cursor that moves in three dimensions. You can push it down into the back of the scene, and pull it up to the front of the scene. Within the scene, you can bring up the arrows you saw and zoom into other interactions within the scene, where manipulating the environment changes the music you hear.

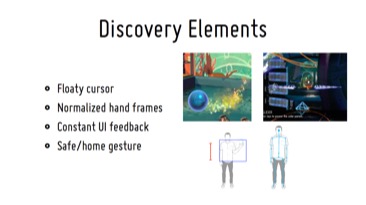

Here are some important elements of our Kinect usage in Discovery gameplay.

1.) We tested out a quicker, more reactive cursor, but the jitter was too much. Instead, we low-pass filter the high-frequency noise out of the hand position, which gives the cursor a really floaty feel. In many application contexts, a mushy, smoothed-out cursor would be terrible, but by couching the cursor in the narrative framework of a magic orb, floaty is the way you expect it to feel. This is a good example of designing your game around the tech that you have, as I mentioned earlier. As soon as the expectation matched the technical capability, it started to feel great.

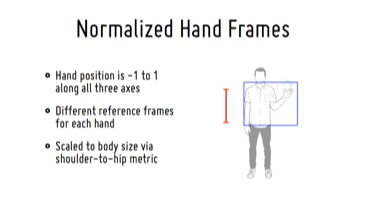

2.) We normalized the player’s hand position into a -1 to 1 space along all three axes, and then reprojected this space into the scenes as dictated by their geometry. This let all players feel comfortable moving the cursor given their arm sizes, but also let us map well into our variety of differently-shaped scenes. Each hand has a different normalized space, which is dictated by the angle at which the player faces the camera, the normalized body metric I mentioned before, and a center point roughly at chest level, halfway between the center of their body and the corresponding shoulder. This does mean that if you were to have a cursor for each hand on the screen at the same time, touching your hands together wouldn’t touch the cursors together, but we don’t have that scenario very often in the game.

3.) The node zoom-in gesture is communicating a ton of information. You know when you’re hovering over it. You know when you’ve brought your hand up to engage it. You know when you’re locked in that mode, because the cursor goes away. You see every movement of your hands thereafter affect the movement of the arrows, which mirrors them. The whole time you’re seeing everything you do reflected in the UI. We even bring the arrows up to the center of the screen when you lock in, which encourages the player to bring their hands up to center in response. When the player’s hands are up in the center of their body, we have a much more stable pose from which to detect arm spreading.

4.) Dropping your hands to your sides is always a “safe” gesture. It will cancel whatever mode you’re in, it will remove the cursor from the scene, and it won’t affect anything currently going on in the scene. You might get a little downward cursor travel, but people drop their arms pretty quickly. It’s a non-threatening reset, which is a great fallback for a confused player.
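Items 1 and 2 above can be sketched roughly as follows. This is an illustration under stated assumptions rather than the game's implementation: the low-pass filter is a simple exponential moving average, the shoulder center stands in for the "center of the body" at chest level, and `alpha`, `reach_units`, `facing_yaw`, and the rotation sign convention are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def low_pass(prev: Vec3, sample: Vec3, alpha: float = 0.15) -> Vec3:
    """One step of an exponential moving average; small alpha = floatier cursor."""
    return tuple(p + alpha * (s - p) for p, s in zip(prev, sample))


def normalize_hand(hand: Vec3, shoulder_center: Vec3, shoulder: Vec3,
                   body_unit: float, facing_yaw: float = 0.0,
                   reach_units: float = 1.5) -> Vec3:
    """Map a hand position into a per-hand [-1, 1] cube.

    The cube is centered halfway between the body center line and the
    hand's own shoulder, sized in body units, and rotated to follow the
    angle (radians, about the vertical axis) at which the player faces
    the camera.
    """
    cx, cy, cz = ((a + b) / 2 for a, b in zip(shoulder_center, shoulder))
    dx, dy, dz = hand[0] - cx, hand[1] - cy, hand[2] - cz

    # Undo the player's rotation about the vertical (y) axis.
    c, s = math.cos(-facing_yaw), math.sin(-facing_yaw)
    rx = c * dx + s * dz
    rz = -s * dx + c * dz

    scale = reach_units * body_unit  # half-width of the cube in meters

    def clamp(v: float) -> float:
        return max(-1.0, min(1.0, v))

    return (clamp(rx / scale), clamp(dy / scale), clamp(rz / scale))
```

A scene would then reproject the normalized coordinates into its own geometry, and the filtered value (not the raw one) would drive the cursor.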

So there’s an overview of the basic Discovery mechanics. Each of our ten worlds has two or more areas to zoom into that offer more gesture gameplay, which are the custom-coded interactions I mentioned before. Here’s a sample of some of those.

[There was a video in this slide showing the diversity of Discovery interactions in Fantasia, similar to the one our co-founder Alex Rigopulos included in his PAX East 2014 keynote speech. That video is viewable here at 43m59s.]

Nearly all of those varied interactions include custom Kinect-handling code. You may notice that this game is very strange. It’s crazy that someone let us make it.

So, onto Performance. This is the more “gamey” of our modes, with a more traditional framework, and therefore the one that’s a little easier to understand. One more video, to remind you what Performance gameplay is like:

[There was a video in this slide showing a segment of Performance gameplay from the earlier full-game video. That video is viewable here, from the 21-second mark to the 1-minute mark.]

The main detection method in this gameplay mode is based upon relative velocities of the player’s hands. There’s no precise positioning required here, which is actually quite freeing for the player and opens up a lot of opportunities for people playing the game differently. You can wave your arms wildly as you play, or you can keep them close to your body and be very precise.

Here are the building blocks we used to build our authoring system.

First is the “swipe” cue. To hit one of these, you move your hand over a speed threshold in the matching direction. There’s pass or fail feedback at the moment of the cue, but not much other feedback.

Second is the “push” cue. To hit one of these, you move your hand over a speed threshold towards the Kinect camera. There’s only pass or fail feedback on this one, as well. They’re both fairly instantaneous cues.
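As a rough illustration of the swipe and push cues, a velocity-threshold detector might look like the sketch below. The function names and the `min_speed` value are assumptions, velocity is scaled by a body-size unit so the threshold is player-relative, and "towards the camera" is taken to mean decreasing z, on the assumption that z grows away from the sensor.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def hand_velocity(prev: Vec3, curr: Vec3, dt: float, body_unit: float) -> Vec3:
    """Hand velocity in body units per second between two frames."""
    return tuple((c - p) / dt / body_unit for p, c in zip(prev, curr))


def detect_swipe(velocity: Vec3, direction: Vec2, min_speed: float = 3.0) -> bool:
    """True if the hand moves fast enough along a unit direction in the x/y plane."""
    along = velocity[0] * direction[0] + velocity[1] * direction[1]
    return along >= min_speed


def detect_push(velocity: Vec3, min_speed: float = 3.0) -> bool:
    """True if the hand moves fast enough towards the camera (decreasing z)."""
    return -velocity[2] >= min_speed
```

Note that neither detector looks at where the hand is, only at how it is moving, which is what lets players gesture big or small.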

Third is the “hold” cue. After a push or swipe, you keep your hand in the same place for some period of time. We show you how much time you have left in the hold, and where your hand is in the “safe” zone relative to where it started in that time period. At the end you get pass or fail feedback.
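A hold cue could be tracked with a small state object along these lines. This is a hedged sketch: `HoldCue`, its fields, and the idea of a spherical "safe" zone measured in body units are assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HoldCue:
    start: Vec3          # hand position when the hold began
    duration: float      # seconds the hand must stay put
    safe_radius: float   # allowed drift from `start`, in body units
    elapsed: float = 0.0
    failed: bool = False

    def update(self, hand: Vec3, dt: float, body_unit: float) -> Tuple[float, float]:
        """Advance one frame; returns (time_left, drift_fraction) for the UI."""
        drift = math.dist(hand, self.start) / body_unit
        if drift > self.safe_radius:
            self.failed = True   # left the safe zone at some point
        self.elapsed = min(self.duration, self.elapsed + dt)
        return self.duration - self.elapsed, drift / self.safe_radius

    @property
    def passed(self) -> bool:
        return not self.failed and self.elapsed >= self.duration
```

The two returned values correspond to the feedback described above: how much time is left, and how close the hand is to drifting out of the safe zone.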

Fourth is the “path” cue. To hit one of these, you move your hand in the direction tangent to the current position along the path. We show you instantaneous quality of your movement, as well as pass or fail feedback for each segment of the path and the overall path.
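One plausible way to score a path cue is to compare the direction of the hand's velocity against the path tangent. The sketch below assumes the path is a 2-D polyline and uses a clamped cosine as the "instantaneous quality" value; both choices, and the function names, are illustrative rather than taken from the game.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def segment_tangent(points: Sequence[Vec2], i: int) -> Vec2:
    """Direction of polyline segment i (from points[i] to points[i + 1])."""
    (x0, y0), (x1, y1) = points[i], points[i + 1]
    return (x1 - x0, y1 - y0)


def path_quality(velocity: Vec2, tangent: Vec2) -> float:
    """Cosine of the angle between velocity and tangent, clamped to [0, 1].

    1.0 means the hand is moving exactly along the path; 0.0 means it is
    stationary, perpendicular to the path, or moving backwards.
    """
    vmag = math.hypot(*velocity)
    tmag = math.hypot(*tangent)
    if vmag == 0.0 or tmag == 0.0:
        return 0.0
    dot = velocity[0] * tangent[0] + velocity[1] * tangent[1]
    return max(0.0, dot / (vmag * tmag))
```

Averaging this value over the frames in a segment would give a per-segment score to compare against a pass threshold.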

Last is the “choice” cue, which is a bit of a special case. At key moments of the song, you choose one of three remixes to apply to a particular track (drums, vocals, etc.). The choice gesture is a combination of a push and a swipe. The push gets the player to roughly center and stabilize their hand, and then they swipe in one of the three directions to choose a remix. Without the push, we found that players would have a big wind-up before they would do the swipe, and the wind-up itself would fire off some of the other detectors, choosing the wrong remix. Adding the center push encouraged the correct behavior, with no wind-up.

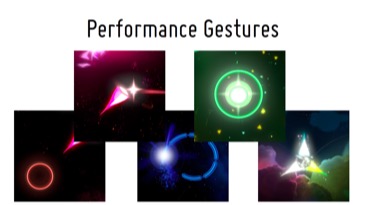

Earlier I emphasized that you always have to give the player feedback, and it may seem that this mode falls short: some of these building blocks only show you whether you succeeded or failed after the fact. This is where our ambient layers come into effect. Behind these cues, there’s a layer of smoke, particles, and other effects that is constantly moving in response to the player’s hand movement. It’s a bit hard to see here in comparison to the video, but it’s the little velocity-stretched bits back behind the swipe and hold cues. These let the player know the camera sees them and that their hands are being picked up, and ultimately it’s just a fun thing to play with as well.

We also have the silhouette at the bottom of the screen, which is a pretty blunt way of giving you feedback. Luckily, silhouettes were a big part of the aesthetic of the original Fantasia film, so we’re still living within the realm of the source material. We can show you where we think your body is and where we think your hands are, as well as which one of your hands seems to be hitting the cues (you can use either!).

Note that none of these cues require you to point specifically at things on the screen, or have your hand in a specific place relative to the rest of your body. This allows for the variety of performance I mentioned before; all you need to be doing is moving your hand in some direction. These were the most reliable of the elements we designed, and with this set of cues we were able to form some very interesting, expressive authoring across the songs in the game.

So there’s a quick summary of the way we used the Kinect skeleton pipeline for Fantasia. Andy will talk for a bit about how they used the Kinect image feeds for Kinect Sports Rivals, and then we’ll take some questions. If you’d prefer, you can get in touch with me at these addresses, as well. Thanks!

[1] As per the official description, “SIGGRAPH Real-Time Live! shows off the latest trends and techniques for pushing the boundaries of interactive visuals.” A compilation video of the submissions in this year’s event can be seen here.

[2] There’s a nice (though non-technical) interview about the Champion feature with Andy and another Rare developer here, including a video of the feature.

[3] Yes, there’s a pink neon-lit Hannah Montana guitar on the wall of our office. That’s Devon’s.

Hi, everyone! Thanks for coming out to our Kinect talk today.

My name’s Mike Fitzgerald, and I’m a programmer at Harmonix Music Systems, in Cambridge, Massachusetts. We’re best known for creating Guitar Hero and Rock Band, as well as Dance Central in the Kinect space. I personally worked on Rock Band 2, The Beatles: Rock Band, and Rock Band 3, before starting development of Fantasia: Music Evolved in early 2011. In case you’re unfamiliar with the game, here’s a brief clip to give you a taste of Fantasia.